“Imagine a comm system that’s nearly unjammable… Actually, you don’t have to imagine. XXX system built one, TRL 5, and proven in Ukraine. Currently in a funding round. DM me if interested.” I squinted with my 5:30 AM un-caffeinated eyes at the cellphone screen and tried to unpack this Unverified Bodacious Assertion (UBA).

TRL 5 usually means a component has been tested in the lab under partially relevant environmental conditions. But if something is “proven in Ukraine”, that would seem to imply it is “flight proven” and has been used downrange to kill something. That’s usually an instant check mark for TRL 9, as Figure 1 shows.

Or here’s another banger from a press release of an unnamed company: “With over 1,000 hours of TRL-9 operation, XXX has demonstrated world-class range against targets…as well as other cutting-edge capabilities like anti-jamming technology.” For a surveillance system, that’s a whopping 6 weeks of continuous use - barely enough time to really figure out if things are working or not - and I doubt the anti-jamming was tested over the full envelope of employment either (short range, long range, etc.).
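As a quick sanity check on that 6 week figure, here is the arithmetic as a small Python sketch. The only input is the 1,000 hours quoted in the press release; the function name is just for illustration.

```python
HOURS_PER_DAY = 24
DAYS_PER_WEEK = 7


def hours_to_weeks(hours: float) -> float:
    """Convert hours of continuous operation into weeks."""
    return hours / (HOURS_PER_DAY * DAYS_PER_WEEK)


# 1,000 hours is the figure quoted in the press release.
weeks = hours_to_weeks(1_000)
print(f"{weeks:.2f} weeks of continuous operation")
```

That works out to just under six weeks if the system ran around the clock, and correspondingly fewer calendar weeks of meaningful coverage if those hours were spread across several units.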

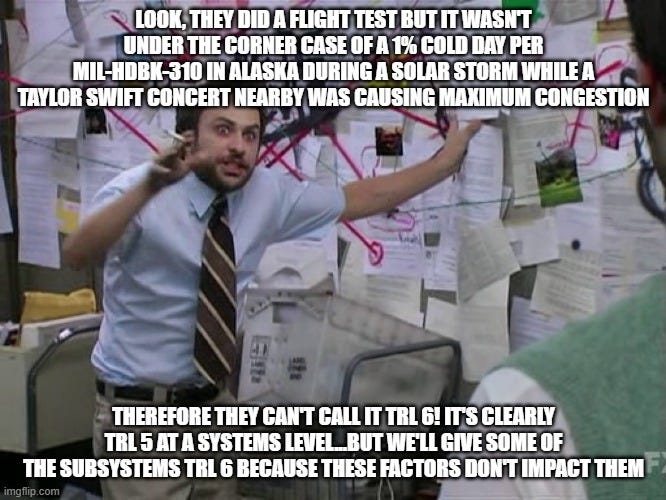

So…are these examples TRL 9 or TRL 5 or TRL 6 or what? You’d be surprised how many different opinions you’d get out of a room of “experts” on this topic. The TRL process has been a quagmire as long as I’ve been in the industry: it’s an attempt to apply a hard quantitative number and criteria to something that’s by its very nature highly subjective and dependent on a lot of judgement calls. In this respect it’s very similar to other subjective industry measures such as Quality Function Deployment, or the House of Quality (also the name of my future Six-Sigma themed restaurant).

When everything was cost plus, satellites cost billions of dollars and took many years to build, and launch was a perilous endeavor, maybe a robust TRL system, guided by a robust waterfall engineering process with this many steps, made sense. But in an era where deployment schedules are weeks long, changes are being made to systems 6x a day thanks to the advent of 3D printing of hardware and Continuous Integration/Continuous Delivery (CI/CD) of software, and launch vehicle failure rates are <0.3%, perhaps the TRL definitions and the process for appraising them have become OBE (Overcome by Events)?

History of TRLs

TRLs were developed by NASA in the 1970s as a way to manage complex space projects and understand the technical risk that was being undertaken. Originally there were only 7 TRLs but in 1989, TRL 6-9 were elaborated upon to further flesh out what paces a system should be put through on orbit. Since then they’ve been adopted by every branch of the military, each of which has put its own unique twist on them (e.g. the Navy takes out “flight” test as a criterion because boats don’t fly). This system was in many ways an outgrowth of the times it was developed in: NASA had endured a series of failures in the 60s, 70s and 80s (as I’ve talked about before here) that left it with dead astronauts and failed missions, and it was looking for a way to manage and reduce obvious risks, like developing and introducing new technologies.

However straightforward and simple a process is, if it is not properly constrained it inevitably becomes a vehicle unto itself for bureaucratic bloat. It becomes a jobs program for the managerial class. TRLs were no different, and alongside the TRL system a bureaucratic morass known as the Technology Readiness Assessment process has developed, with its own 98-page guidebook and extremely prescriptive definitions for all these technologies. This would all be funny if it weren’t for the fact that these processes and satisfying their abstract requirements have become a cottage industry largely unfocused on the ultimate goal: delivering cutting edge systems to the customer as quickly, affordably and reliably as possible.

For those that don’t know, no process is sufficient.

For those that do know, no process is required.

- random brain dropping from an engineering fellow’s whiteboard circa 2011

The process for formally defining the TRL of a system is rather straightforward: the product is assessed and all technologies to be used are divided into Critical Technology Elements (CTEs), typically at the subsystem level but sometimes at the component level. For instance, if there is a new type of rocket engine, it’s almost certainly considered a CTE. If it’s a missile seeker, it’s possible the whole seeker could be considered a CTE, or perhaps one component that is very new, like a high power amplifier made from a novel material like Gallium Nitride (GaN), is considered a CTE. For those considered immature (typically less than TRL 8), the contractor is then required to develop a series of “Tech Maturation Plans” which take each of these CTEs from whatever TRL they started with to at least the minimum of TRL 6 (system test completed in a relevant environment) required to get into production.
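The bookkeeping described above can be sketched in a few lines of Python. This is an illustration only: the `CTE` class, the example elements and their starting TRLs are hypothetical, and the only number taken from the text is the TRL 6 minimum for production.

```python
from dataclasses import dataclass

# From the text: TRL 6 is the minimum required to get into production.
MIN_PRODUCTION_TRL = 6


@dataclass
class CTE:
    """A Critical Technology Element (hypothetical structure for illustration)."""
    name: str
    level: str  # "subsystem" or "component"
    trl: int    # assessed TRL, 1-9


def maturation_steps(cte: CTE, target: int = MIN_PRODUCTION_TRL) -> list[int]:
    """TRL levels the CTE still has to pass through to reach the target."""
    return list(range(cte.trl + 1, target + 1))


# Hypothetical assessment results, not data from any real program.
ctes = [
    CTE("rocket engine", "subsystem", 4),
    CTE("GaN high power amplifier", "component", 3),
    CTE("flight computer", "subsystem", 7),
]

# Only CTEs below the production minimum need a Tech Maturation Plan here.
plans = {c.name: maturation_steps(c) for c in ctes if c.trl < MIN_PRODUCTION_TRL}
for name, steps in plans.items():
    print(f"{name}: mature through TRL {steps}")
```

Each entry in a list like `[5, 6]` stands for a gate that, on a real program, turns into test events, reviews and billable milestones, which is where the schedule goes.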

For major programs like new missile buys, a new submarine or a fighter jet, the systems engineering and program management team at the contractor and its subcontractors will typically spend 6-24 months (and millions in billable hours) fighting back and forth through a series of working groups with various “experts” in the Systems Program Office (SPO) and their supporting Systems Engineering and Technical Assistance (SETA) contractors on the specific definitions of the CTEs and their associated tech maturation plans.

Because the SETAs are usually awarded a contract to the SPO proportional to the size of the contract in the next phase, the SETAs are somewhat incentivized to make this as complex as possible to maximize their required support. The type of folks that gravitate towards SETA work tend to be the kind (read bureaucratically minded) who lean towards more complex processes which they believe are more likely to minimize “escapes”. Making matters even worse, the SETA has no authority (that still rests with the program office and its contract officers) but can endlessly pontificate, sandbag, pout and otherwise suck the oxygen out of the room until they get their way on these things because they are “the experts”.

“The bureaucratic mind has two needs: to achieve the financial goal set for it, and to keep being employed. With employment comes a pension, perks, status, a title and the chance to move up in the organization.” - Dr. John R Taylor, Canadian Journal of Plastic Surgery, 2009

Most contract officers, who are incentivized to minimize risk up until the very point they’ve blown their budget (no one gets promoted for blowing up a rocket early and under budget), will defer to the SETAs they are paying for valuable “advice”. Since most of these contracts are Cost Plus Incentive Fee, the contractor is also incentivized to make them more complex at the corporate level, even if it’s frustrating to the engineers working on it who just want to push a product out the door and see it work.

To make matters even worse, incremental contract milestones are often tied to the tech maturation plans, with maximum incentive to have as many steps as possible so billing can occur as front loaded and incrementally as possible. This fits with the “legacy prime” or cost plus mindset of getting paid by a customer to produce paper rather than product.

From a taxpayer perspective, the Tech Maturation Plans all too often minimize risk BUT they do so by maximizing the time to field a product and thereby the cost - marching army costs on these programs can run in the millions per day and all too often the critical path is paced by tech maturation plans. As Elon is fond of saying “the cost of time outweighs the cost of cost.”

My own Tech Readiness Assessment experience

I’ve briefed or helped prepare briefings for at least four technical readiness boards (TRBs) - a requirement for any major defense acquisition program (MDAP - >$500M in RDT&E or $3B in procurement) to move beyond technical demonstration (TD) prototyping to Engineering and Manufacturing Development (EMD) - something referred to in the DOD organizational bible known as JCIDS Milestone B.

In the case where I was the main briefer, this involved a full coat and tie all day briefing in July in front of a “murder board” of 40 so-called subject matter experts. The TRB panel members ranged from former fighter squadron commanders, to former DARPA PMs, to a retired brigadier general to a number of SETAs to test pilots. Impeccable credentials!

So I spent 45 minutes (x2) briefing the tech maturation plans we had already performed or intended to perform for our particular CTEs. My management ensured that my talking points were so rehearsed I sounded like a politician answering an uncomfortable question. Since TRL is much more of an art than a precise science and every test wicket and subsystem is different, the TRB panel members all came with their own pet rocks and agendas. Since the risk level of a team’s offering going forward - a key criterion for how a Request for Proposal (RFP) is scored - was heavily influenced by the TRB’s finding, careers were made or broken based on what happened in this briefing and whether you could tell a convincing story that you were TRL 6.

Or so I thought. As if to amplify the absurdity of all this, the executive in charge of my program team literally fell asleep and started snoring loudly in the front row halfway through my briefing - highly distracting when dodging pet rocks being thrown at you. His junior executive just waved it off and gestured at me to continue. Well, at least I know he’s not worried about me.

After 9 hours of briefings the TRB then met to individually vote on what they thought the true TRL was of all our CTEs. Having gotten to see the tabulation afterwards, I found this result highly amusing. For some (usually less political) CTEs they were remarkably consistent and 90% of the results were the same or at most +/- 1 from each other. For others, the results varied as widely as 3-8 in some cases, with the personal agendas of the reviewers again creeping in. It was clear that what was supposed to be an “objective” process was far from it.
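To make that spread concrete, here is a small Python sketch of how such a tabulation could be summarized. The ballots are invented to mirror the two patterns described above (about 90% agreement within +/- 1, versus votes ranging from 3 to 8); they are not the actual results, and `vote_spread` is a hypothetical helper.

```python
from collections import Counter


def vote_spread(votes: list[int]) -> dict:
    """Summarize TRL ballots: most common vote, range, share within +/- 1 of it."""
    mode, _ = Counter(votes).most_common(1)[0]
    within_one = sum(abs(v - mode) <= 1 for v in votes) / len(votes)
    return {"mode": mode, "range": (min(votes), max(votes)), "within_one": within_one}


# Invented ballots for illustration only.
consistent_cte = [6, 6, 6, 6, 6, 6, 5, 7, 6, 4]
contested_cte = [3, 4, 5, 5, 6, 6, 7, 8, 4, 6]

print("consistent:", vote_spread(consistent_cte))
print("contested: ", vote_spread(contested_cte))
```

For a measure that is supposed to be objective, the share of votes within one level of the most common answer is a rough repeatability check; in this made-up contested case it falls to 60%.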

Needless to say, the briefer with the widely varying (and not surprisingly lower TRL) result found himself getting a lot of extra “help” he didn’t ask for. I’m sure if we had run the same process again on a different day of the week with a different set of reviewers, the results would have been entirely different.

I don’t blame the reviewers: I blame a process that had become too bureaucratic and complicated (and for that matter slow and expensive) to get a repeatable and reliable result. If this was being done for private dollars rather than to satisfy a bureaucratic wicket tied to a payment milestone, this never would have happened. The systems would have been built and integrated as quickly as possible and proof of technical maturity would have been based on the most rigorous real world test event possible to get to as fast as possible.

My experience was the rule rather than the exception when it comes to TRBs: Cost Plus program managers love these long drawn out tech maturation plans because they are filled with lots of events and meetings that are easy to estimate and get paid for. Engineers hate them because they create bureaucratic gates that slow down the work and their ultimate goal of delivering a product as fast as possible.

How all these steps no longer make sense

There are several reasons why the TRL scale no longer makes sense. Building hardware today, thanks to technical innovations like reprogrammable ICs (FPGAs, GPUs), 3D printers, rapid simulation tools and CAD/CAM techniques, has never been easier. It’s about as hard to build hardware today as it was to write software when I started my career 20 years ago. Software is even easier: nowadays there are so many libraries, open source tools and APIs, not to mention AI copilots that are just starting to see widespread adoption, that your average software engineer is 10x more productive than they were then. So integration and maturation steps that before were painful and filled with risk are easily bypassed in favor of getting to a testable or flyable prototype.

The way that we design complex systems has also changed: the days of over-characterizing and spec’ing systems to the particle level using the perfectly prescribed “Systems Engineering V” or waterfall development shown in Figure 2 have given way to Agile software and hardware engineering processes built with rapid prototyping and iteration in mind. Failure is now accepted and embraced as part of the process!

“Mission Assurance”, the mantra I heard constantly during the first 5 years of my career, has given way to “Elon’s algorithm”:

Ironically, we got rid of more quality processes and the rockets got more reliable!

All this being said, we almost certainly still need some simple lightweight metrics for abstracting the technical maturity and manufacturing maturity of systems.

Software Acquisition Framework - slayer of Software TRL?

The absurdity of the TRLs as they exist today is perhaps most obvious in software. Looking at Table 1 above from the perspective of a modern software engineer, 1-3 are basically one step (who writes algorithm description documents anymore?), 4-5 are basically one step, 6 is basically the alpha, 7 the beta, 8 is pilot and 9 is full release. I know for a fact however that there are R&D groups at various primes still operating within this framework — and getting paid cost plus dollars to do so.
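The collapse described above can be written down as a simple lookup, sketched here in Python. The labels "alpha", "beta", "pilot" and "full release" come from the paragraph; the names "concept" and "prototype" for the merged 1-3 and 4-5 steps are my own placeholders, not established terms.

```python
def software_stage(trl: int) -> str:
    """Map a legacy software TRL (1-9) onto a modern release stage."""
    if not 1 <= trl <= 9:
        raise ValueError(f"TRL must be between 1 and 9, got {trl}")
    if trl <= 3:
        return "concept"    # placeholder name for merged TRL 1-3
    if trl <= 5:
        return "prototype"  # placeholder name for merged TRL 4-5
    return {6: "alpha", 7: "beta", 8: "pilot", 9: "full release"}[trl]


for trl in range(1, 10):
    print(f"TRL {trl} -> {software_stage(trl)}")
```

Nine levels reduce to six stages, and only the last four correspond to anything a modern software team would actually track.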

The “systems engineering V” that is the north star for the TRL rubric presumes a linear process of development for software, culminating in a Formal Qualification Test (FQT) in compliance with MIL-STD-498. MIL-STD-498 (first published in 1994) was a pretty rigorous spec meant to ensure that the Operational Flight Program (OFP) or software stack for a system was safe to put on safety critical hardware. Intended as a two-year gap filler to hold things over until commercial standards caught up, it is still in use today. It was written in an era of “spaghetti code” - object-oriented programming was still relatively new, MIPS of processing and megabytes of memory were scarce resources, and writing too many lines of code before hitting the compile button was something you did at your own peril. Today’s commercial software, which is highly containerized and leans heavily on APIs and higher order languages with more automatic checking features (and now AI agents to write the code for you), is in many ways nothing like this, and yet we still build many of our largest aerospace systems to comply with these standards.

MIL-STD-498 mandates a rigid "big-bang" testing approach, where any software change triggers full-scale requalification due to strict document control, traceability, and waterfall-based processes. As Table 2 shows, it basically requires an FQT every time you make even minor changes to the OFP. This makes even minor updates expensive and has condemned many DoD systems to software feature updates on a biennial basis (or worse). In an era where Ukrainian drone operators are using commercial Continuous Integration/Continuous Delivery (CI/CD) processes to push software updates to drone fleets six or more times a day, this is entirely unacceptable.
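To put the cadence gap in numbers, here is a back-of-the-envelope Python sketch using only the two figures from the paragraph above: one feature update every two years versus six updates a day. Treating "six or more" as exactly six makes this a lower bound.

```python
UPDATES_PER_DAY = 6        # "six or more times a day" (lower bound)
DAYS_PER_YEAR = 365
BIENNIAL_CYCLE_YEARS = 2   # one update per biennial cycle

# Updates a CI/CD fleet receives in the time one biennial release ships.
ci_cd_updates = UPDATES_PER_DAY * DAYS_PER_YEAR * BIENNIAL_CYCLE_YEARS
print(f"{ci_cd_updates:,} CI/CD updates per single biennial update")
```

Even at the lower bound, that is a difference of more than three orders of magnitude in how often fielded software can respond to what the adversary is doing.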

In 2020, the DoD introduced the Software Acquisition Framework (SAF) shown in Figure 4 which greatly simplified the process of building software - embracing commercial agile development. One major change is acceptance of the reality that software is now an asset that must be continuously cared for and fed rather than something that you write once and only infrequently change afterwards.

Initially limited to just application software, the SAF has now been expanded to include embedded software as well. It was recently revealed that under SecDef Hegseth, DoD intends to go even further and effectively mandate the Software Acquisition Pathway (SWP) for all software moving forward. This is not just about speeding things up: the mandate is also to make Commercial Solutions Openings and Other Transactions (OTs/OTAs) the default rather than the exception. This puts the onus back on industry to deliver a product as requested (design/build) rather than the traditional time consuming process with massive oversight (design/bid/build).

So with the waterfall approach on its way out, it’s unclear to me if the software TRLs that went along with it have much of any utility anymore, except for marketing like the UBAs I started this article with.

What do we replace TRLs with?

So the question is what do we replace all this with?

I have wracked my brain for a little while now on what an appropriate replacement is, in particular from observing our team at CX2 and how they operate and mature our products. I’ve come to the realization that hardware, software and the system really don’t have a real 1:1 mapping anymore. The ease with which you can set up simulations due to commercial tools and rapidly build up hardware in the loop tests these days for most systems really changes the game.

I also think we need to recognize that 40+ years of building startups has given us a really good nomenclature in the form of “pilot implementations” that we should embrace as defense nomenclature as well. Given that a pilot system in customer evaluation is almost a gold standard for Series A funding for all but the most deep tech of systems, it seems reasonable to me that we should embrace this for the TRLs as well.

Tying this back to the two examples I started this diatribe with, both of them would clearly fall under “Pilot implementation” by this definition with very little ambiguity — limited use by a customer in a real world context.

Wrapping up

To quote Bob Dylan, the times they are a changin’. The ease with which rapid prototyping and fielding can take place, perhaps best exemplified by the rapid pace of UAS and CUAS development by both sides in the Ukraine war, has made us question almost sacred processes for building systems. They have probably been obsolete for a while and we didn’t even notice because we saw no burning need to change. The TRL scale is just one example of something we need to get away from as we move to a more commercial contractor, fixed price, rapid model of procurement where time of fielding is a critical metric and not an independent variable.

I’ve taken my stab at a refresh of the TRL scale that I think makes more sense for 2025. Hopefully someone with more expertise (and more time on their hands) will try to iterate and improve upon it so that we have something much more useful and can quit trying to call everything TRL 9 when it isn’t.